
High Performance Scripting in 3forge without the Complexities of Threading

3forge's AMI Script now includes additional support for concurrency and pausability without the complexity of traditional thread-based development.


The performance advantages of multi-threading are critical for applications that connect to remote data sources and process large data sets. New concurrency and pausability support in AMI Script aims to optimize code that does not return immediately, such as remote system calls and code that processes large data sets. Concurrency and pausability allow 3forge to implement behaviors that perform like multi-threading, but without the complexity and support burden.

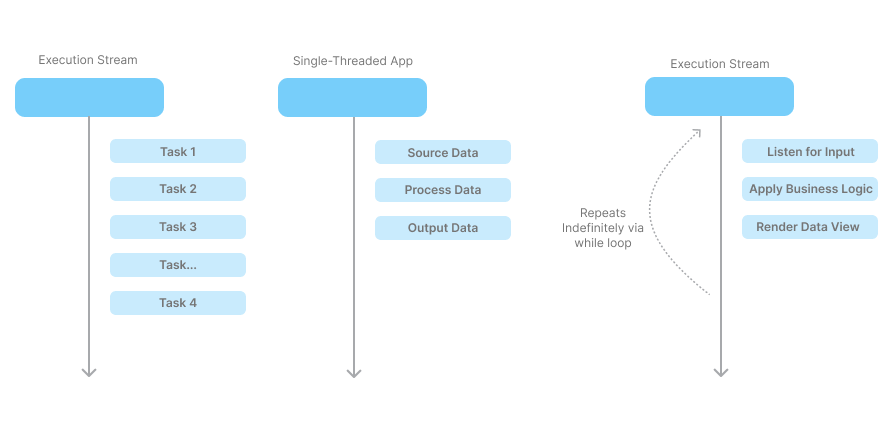

In computer science, a thread represents the processing of a single instruction stream by a processor (CPU). A single-threaded application is a contained set of instructions executed in a single stream. Some applications process their input and quickly terminate, while others, such as a user interface (UI), run indefinitely.

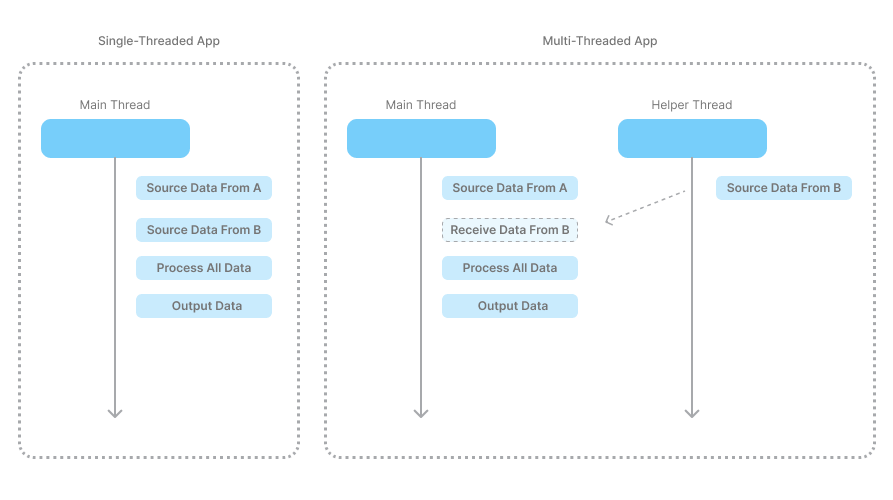

The term "Multi-Threading" comes into play when the computer is responsible for executing multiple instruction streams concurrently within an application. Threading is analogous to having one person write a document versus several people. With one person, there is no need to coordinate updates or merge changes. With several people, the document may come together more quickly, but the composition process requires coordination to prevent changes from being overwritten. Further coordination is required to ensure that all updates are present before the document is complete.

| Term | Meaning |

|---|---|

| Synchronous |

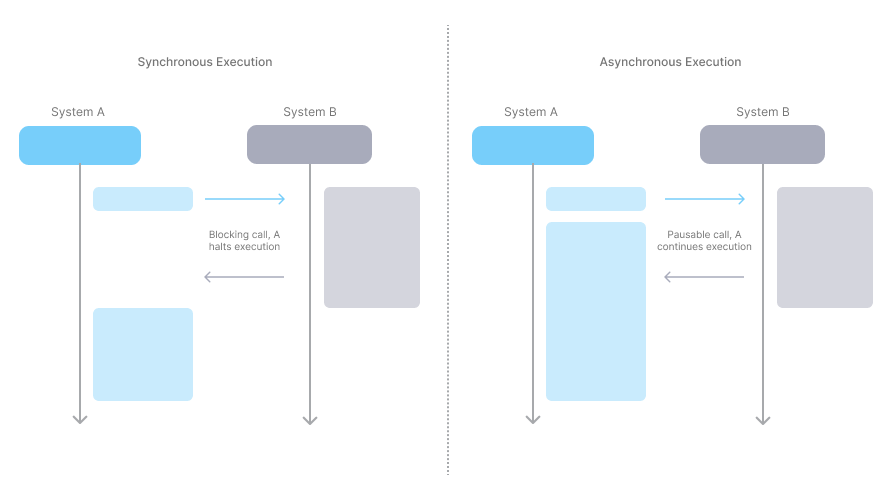

Synchronous execution is synonymous with single-threaded, sequential execution. In this scenario, a single thread works through various instructions, one at a time. Synchronization can also be used in programming to describe the forced coordination of multiple threads. |

| Asynchronous | Asynchronous execution is synonymous with multi-threaded execution that allows a single application to process multiple instructions concurrently. Heavy operations are offloaded from the main execution stream and handled by another thread. An asynchronous call returns immediately, performing no heavy lifting. |

| Blocking | Blocking is the halting of a thread's execution while it waits on another process or thread to complete a task. A blocking call is typically a heavy operation that takes time to complete and can significantly impact performance. |

| Cache | Caching is the process of temporarily storing data and making it available for quick access. Caches are often implemented with Maps for fast lookup and are commonly used in multi-threaded applications. |

| Concurrency | Concurrent processing is the ability to process multiple execution streams simultaneously. |

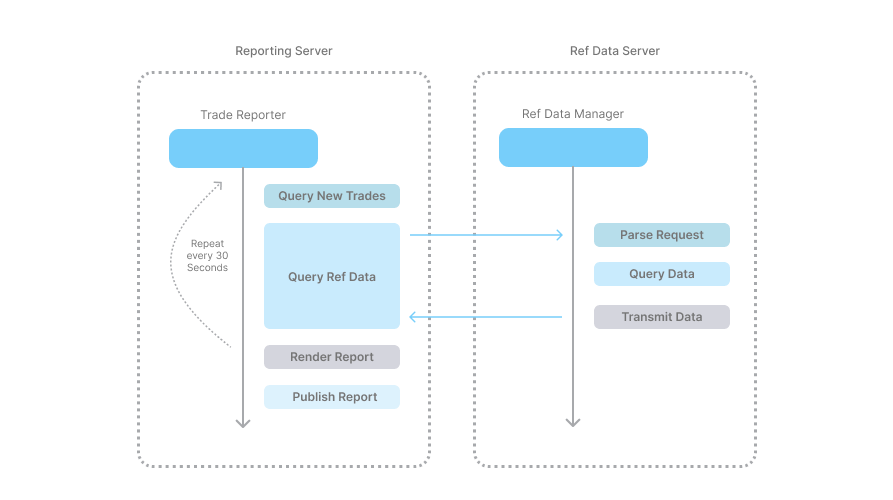

The Trade Reporter is a single-threaded process that builds a "new trades" report every 30 seconds.

The reporting process happens sequentially as part of a single instruction stream. The Trade Reporter makes a blocking call to a Reference Data Manager that is hosted on a separate server. The Reference Data Manager's threading model is not relevant. However, it is important to understand that it listens for reference data requests and then builds and transmits a response, which takes precious time that slows the Trade Reporter down.

The single-threaded version of the Trade Reporter runs well for a while, until trade volumes and reference data volumes increase significantly. The process has to make two remote system calls to (1) get trade data, and (2) get client reference data. If the firm is now trading with 3x the number of counterparties and producing 5x the volume of trades, these calls will take longer and put the Trade Reporter's ability to build the report in under 30 seconds at risk.

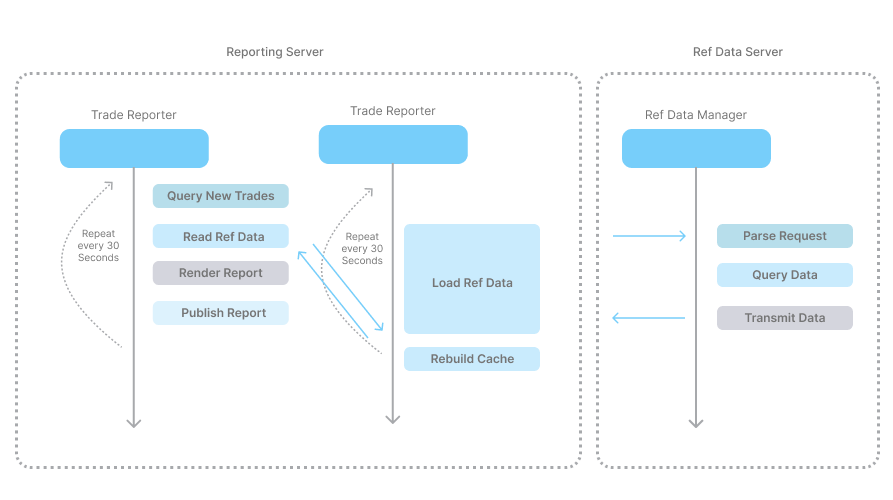

In part because reference data does not change as often as trade data, a decision is made to employ a separate thread to cache the reference data, making the results available for fast query from a local Map-based data cache. This approach minimizes blocking during the report generation process.

Since the blocking call to the reference data server has been removed from the main execution stream, the Trade Reporter speeds up significantly because calls to the reference data cache return immediately. The report can now be produced easily within the 30-second interval.

So far, so good, but as with the earlier example of multi-person document creation, the reference data cache updates must be coordinated, or "thread-safe" in tech speak. If there is no coordination, the reporter may end up querying an empty or partially filled cache that is in the process of being rebuilt. Coding mechanisms such as semaphores and synchronized blocks can help by forcing one thread at a time to perform operations on the cache. As a result, a thread may need to wait until the resource is available. If written correctly, the waiting will be minimal, but the resulting code is significantly more complex than the single-threaded variant.
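The coordination described above can be sketched in a few lines. This is a minimal illustration in Python rather than AMI Script, and every name in it (`ReferenceDataCache`, `fetch_reference_data`, the sample counterparties) is hypothetical. The refresher builds a complete replacement map first and only then swaps it in under a lock, so a reader never observes an empty or half-built cache.

```python
import threading

def fetch_reference_data():
    """Hypothetical stand-in for the slow remote call to the Reference Data Manager."""
    return {"ACME": {"name": "Acme Corp"}, "GLOBEX": {"name": "Globex"}}

class ReferenceDataCache:
    """Map-based cache whose reads and writes are guarded by one lock."""

    def __init__(self):
        self._lock = threading.Lock()
        self._data = {}

    def refresh(self):
        fresh = fetch_reference_data()  # slow work happens outside the lock
        with self._lock:
            self._data = fresh          # swap in the fully built map

    def get(self, key):
        with self._lock:                # readers wait only for the brief swap
            return self._data.get(key)

cache = ReferenceDataCache()

# A separate thread refreshes the cache; the reporter thread only reads it.
refresher = threading.Thread(target=cache.refresh)
refresher.start()
refresher.join()

acme = cache.get("ACME")
```

Even in this small form, the extra class, the lock, and the rule about doing slow work outside the lock are all overhead that the single-threaded version never needed.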

| Scenario | Examples |
|---|---|
| External calls | Calls to other running processes on the same or separate host machines |
| Input/Output (I/O) intensive operations | |
| Intensive data processing | |
| Any of the above from within a User Interface or latency-sensitive process | Any blocking call that can be invoked from an onChanged() method or similar risks freezing the UI until the operation completes. |

Asynchronous execution via pausable coroutine statements has been a seamless part of AMI Script for years. Many developers may not have realized it, but they certainly appreciate not having their user interface freeze when sending an email or executing one or more large database queries.

Coroutines offer the ability to suspend and resume execution without the complexity of threading. 3forge's implementation adheres to the async/await pattern, which lets code resemble a synchronous design while still executing asynchronously, without adding threads. A pausable statement in 3forge is therefore declared like any other synchronous code block, but rather than executing immediately, it is prepared for execution and queued by the active thread.
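To make the suspend-and-resume idea concrete, here is a small sketch of the general async/await pattern using Python's `asyncio`. It is not AMI Script and does not show how 3forge implements pausable statements internally; `send_email` and `keep_ui_responsive` are hypothetical stand-ins. The slow call suspends itself, other work runs in the meantime, and everything stays on one thread.

```python
import asyncio
import threading

events = []  # records (event name, thread id) in the order things happen

async def send_email(recipient):
    events.append(("email started", threading.get_ident()))
    await asyncio.sleep(0.05)  # stand-in for slow I/O; suspends this coroutine
    events.append(("email sent", threading.get_ident()))
    return f"sent to {recipient}"

async def keep_ui_responsive():
    events.append(("ui tick", threading.get_ident()))

async def main():
    email = asyncio.create_task(send_email("ops@example.com"))
    await asyncio.sleep(0)      # yield once so the email task can start
    await keep_ui_responsive()  # runs while the email coroutine is suspended
    return await email          # resume here once the email completes

result = asyncio.run(main())
order = [name for name, _ in events]
thread_ids = {tid for _, tid in events}
```

The recorded order shows the UI work landing between the start and the end of the email call, and `thread_ids` contains a single entry because no additional thread was created.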

Example code that should look familiar

Pausable Statements available in AMI Script

| AMI Script Pausable Statements |
|---|
| USE EXECUTE |
| waitMillis(..) [New, Coming Q2 25] |
| Session:sendEmail(..) |
| Session:callCommand(..) |
| Session:prompt(..) |

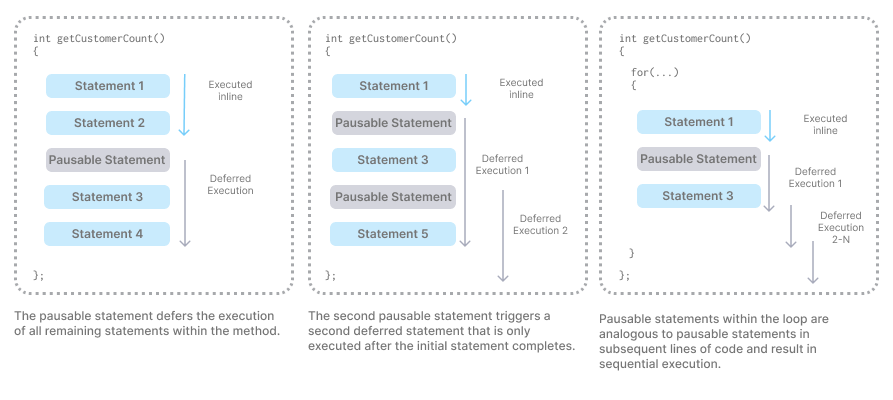

When a method contains a pausable statement, it is considered a "pausable formula". All statements before the deferred statement are executed inline. The deferred statements, including subsequent code, are queued for later execution. Without a "dispatch" block (more on this below), the deferred statement boundary is determined to be the end of the method. Thus, a method that has two deferred statements will still execute sequentially unless concurrent blocks are used.

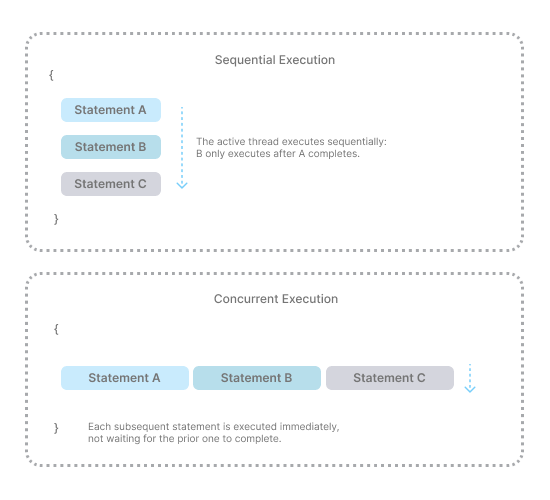

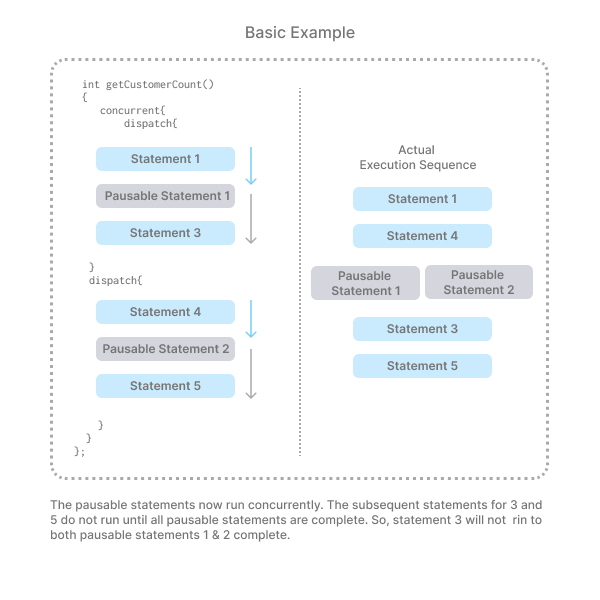

Concurrency allows multiple blocks of pausable code to be executed simultaneously. For example, if a method is required to query data from three data sources, a concurrent approach would allow for all sources to be queried at the same time and avoid "one after the other" sequential execution.

In 3forge, multiple pausable statements can be executed in parallel in a concurrent code block.
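The difference between sequential and concurrent execution of slow calls can be sketched as follows. The example uses Python's `asyncio` purely for illustration; it is not AMI Script, and the `query` function, its fixed delay, and the source names are assumptions standing in for real remote queries.

```python
import asyncio
import time

SOURCES = ["trades", "instruments", "counterparties"]  # hypothetical data sources

async def query(source, delay=0.1):
    await asyncio.sleep(delay)  # stand-in for a remote query that takes time
    return f"{source}: rows"

async def run_sequential():
    # Each query starts only after the previous one has finished.
    return [await query(s) for s in SOURCES]

async def run_concurrent():
    # All three queries are in flight at the same time.
    return await asyncio.gather(*(query(s) for s in SOURCES))

def timed(coro_fn):
    start = time.perf_counter()
    result = asyncio.run(coro_fn())
    return result, time.perf_counter() - start

seq_result, seq_seconds = timed(run_sequential)
con_result, con_seconds = timed(run_concurrent)
```

Both versions return the same rows in the same order. The sequential run takes roughly the sum of the three delays, while the concurrent run takes roughly as long as the slowest single query.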

AMI Script Concurrent Control Blocks

| Element | Description |
|---|---|
| concurrent{} | Wraps a set of pausable statements that are executed in parallel |
| dispatch{} | Defines a pausable statement block. Must contain a pausable statement |

A dispatched block of code can fail and be isolated with appropriate error-handling, allowing the remaining streams of execution to complete and return.
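The isolation idea can be illustrated with a short sketch, again in Python's `asyncio` rather than AMI Script. The data source names, `load_counterparties`, and the simulated failure are all hypothetical. Each unit of work carries its own error handling, so one failing source is logged while the others still complete and contribute their results.

```python
import asyncio

async def load_counterparties(source):
    await asyncio.sleep(0.01)  # stand-in for a remote query
    if source == "broken_ds":  # simulate one unreachable data source
        raise ConnectionError(f"cannot reach {source}")
    return {f"{source}_acct": {"source": source}}

async def guarded_load(source, parties, errors):
    # The try/except lives inside each unit of work, so a failure stays local.
    try:
        parties.update(await load_counterparties(source))
    except Exception as err:
        errors.append(f"{source}: {err}")

async def load_all(sources):
    parties, errors = {}, []
    await asyncio.gather(*(guarded_load(s, parties, errors) for s in sources))
    return parties, errors

parties, errors = asyncio.run(load_all(["ds_a", "broken_ds", "ds_b"]))
```

After the run, `parties` holds the entries from the two healthy sources and `errors` holds a single message for the failed one.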

While powerful in many circumstances, there are scenarios where pausable statements do not make sense, including scenarios where a block of code is repeatedly executed in a tight loop (e.g. a column filter). To prevent system performance bottlenecks, 3forge does not allow pausable statements to be executed in lambda scenarios, including SQL statements and Web UI formulas.

Pausable Statement Support by Context

| Category | Scenario | Allowed | Commentary |
|---|---|---|---|
| Web:Callback | User Interface Callback (e.g. Button::onChange()) | Yes | |
| Web:Callback | Data Model::onProcess() | Yes | |
| Web:Callback | Data Model::onProcess() for a blender on a real-time feed | Yes, but | Technically possible, but if the feed is high-volume, pausable statements are discouraged due to repeated event-processing loops. |
| Web:Formula | Real-time UI Table Where Filter | No | |
| Web:Formula | Table Column Formatter | No | |
| Center:User Function | Center Trigger | Yes, but | Code after deferred statements is currently unreachable (fix planned). |
| Center:User Function | Center Stored Procedure | Yes, but | Code after deferred statements is currently unreachable (fix planned). |
| Center:User Function | Center Timer | Yes, but | Code after deferred statements is currently unreachable (fix planned). |
| Relay:User Function | Relay Transformation Script | No | |

Enrich Order Data with AMI Script

    // Return a hash of order data with additional counterparty and instrument data.
    // cptyRefDataSources is a list of [datasourceName, tableName] pairs.
    public Map hashEnrichedOrderData(List orders, List cptyRefDataSources){
      Map ret = new Map();
      Map instruments;
      Map parties = new Map();

      // Load the Instruments data set (a single pausable query)
      try {
        Table tIns = USE DS="AMI" EXECUTE SELECT * FROM Instruments;
        instruments = hashMapList(tIns.getRows(), "Symbol");
      }
      catch(Object errIns){
        session.log("Error loading instruments: " + errIns);
        instruments = new Map();
      }

      // Load the Counterparty data sets in parallel, one dispatch per data source
      concurrent {
        for(int i = 0; i < cptyRefDataSources.size(); i++) {
          dispatch {
            try {
              List curDS = cptyRefDataSources.get(i);
              String dsName = curDS.get(0);
              String tableName = curDS.get(1);
              Table tParties = USE DS="${dsName}" EXECUTE SELECT * FROM ${tableName};
              parties.putAll(hashMapList(tParties.getRows(), "Account"));
            }
            catch(Object errParties){
              // A failure in one data source does not stop the others
              session.log("Error looking up Counterparties: " + errParties);
            }
          }
        }
      }

      // Hash orders by OrderID and add cpty and instrument entries.
      // Lookups use each order's own Account and Symbol values
      // (assumes every order carries "Account" and "Symbol" fields).
      Iterator iter = orders.iterator();
      while(iter.hasNext()){
        Map curOrder = iter.next();
        curOrder.put("cptyInfo", parties.get(curOrder.get("Account")));
        curOrder.put("instInfo", instruments.get(curOrder.get("Symbol")));
        ret.put(curOrder.get("OrderID"), curOrder);
      }
      return ret;
    };

    // Hash a list of maps by a given key.
    public Map hashMapList(List data, String key){
      Map ret = new Map();
      Iterator i = data.iterator();
      while(i.hasNext()){
        Map curItem = i.next();
        ret.put(curItem.get(key), curItem);
      }
      return ret;
    };

Written by Andy George

Solutions Architect